Outliers deviate from the norm, significantly enough to give marketers pause. But if we examine the entire data set carefully with our marketing goals in mind, outliers can tell us more about our data, how we gather it, and what is in it. Dealing with data outliers requires careful consideration of a number of statistical methods and is no task for data debutantes. It is well worth the effort, though, because of the impact outliers have on data results and on the actions taken based on those results.

In July 2010, The New York Times concluded “you can expect an average [investment] return to be your average experience. Returns either greater or less than the average are less and less likely as you move further and further from the average. At extreme tips of this nice little bell are positive or negative returns that are so unlikely to happen that they are thought to be almost statistically impossible.” The newspaper went on to add that outliers are, “something that is so unlikely that it is thought to be unrepresentative of the rest of the sample. In this case, these outliers generate returns that, according to the theory, we are almost never supposed to see.”

Identifying Outliers

Anomalies are part and parcel of the analyst’s daily agenda. Even in a reasonably high-quality data set, at least 5% of the data will likely contain anomalies. Odd as these data might be, it is more peculiar not to find them than to find them.

More formally defined, an outlier is an observation that lies an abnormal distance from other values in a random sample from a population.

The challenge of outliers is that they can significantly distort research results. Consider the impact of an outlier in this hypothetical example. A researcher analyzed the number of cups of coffee college students typically consume each day. In a sample of 31 students, 12 drank 1 cup per day, 13 drank 2 cups, 2 drank 3 cups, 2 drank 4 cups, 1 drank no coffee, and one drank 100 cups per day.

That last student, the researcher concluded, was obviously an outlier. The outlier threw off the results of the analysis even when other factors were taken into account, such as the possibility that higher tuition leads to more stress and therefore to more coffee consumption.
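You can see the distortion directly by computing the average with and without the 100-cup student. The sketch below rebuilds the hypothetical sample from the per-group counts in the example. It then compares the mean with the median, which is far less sensitive to a single extreme value. The variable names are illustrative only.

```python
from statistics import mean, median

# Rebuild the hypothetical coffee sample from its per-group counts:
# cups per day -> number of students.
counts = {0: 1, 1: 12, 2: 13, 3: 2, 4: 2, 100: 1}
cups = [c for c, n in counts.items() for _ in range(n)]

# The same sample with the single 100-cup respondent removed.
without_outlier = [c for c in cups if c != 100]

print(f"n={len(cups)}: mean={mean(cups):.2f}, median={median(cups)}")
print(f"n={len(without_outlier)}: mean={mean(without_outlier):.2f}, "
      f"median={median(without_outlier)}")
```

The single extreme response nearly triples the mean, from about 1.73 to about 4.90 cups. The median stays at 2 cups either way.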

Outliers significantly affect data results and the actions we take based on them. Their presence must be dealt with. We’ll briefly discuss some of the best ways to handle them, so that marketers target the right individuals based on what their data analysis says.

I’d like to emphasize that just because a value appears anomalous does not mean it should be excluded from subsequent analysis. It all depends on how you intend to use the results. Take the example of a data file that contains a few very old individuals and a much larger proportion of customers under 40.

Are these ‘older’ records incorrect? Probably not. There just aren’t that many people who reach this age. So including these customers may well be appropriate when the data set serves as a demographic snapshot of all the individuals in it. From a marketing point of view, however, outliers and what they mean for marketing efforts call for a more considered approach.

Further Analysis

Here is an example: you’re in the business of renting videos and foreign television programs. Most customers rent a variety of products during a typical month, but a few rent both videos and foreign television programs, and revenue from these select customers far exceeds the average. These customers are anomalies, data outliers, but good ones as far as the video and foreign TV program rental business is concerned. A good marketer would study them further. If we were to develop an attrition model, however, it may be unwise to include these anomalies. Incorporating them into such a model might mislead the manager into targeting the wrong potential attritors. Outliers can tell us a lot about our target markets, but their presence also warns us from the outset to take care when dealing with data. It is well worth looking carefully at what the data says as well as at what is in the data file.

We know that outliers significantly affect results. It is just as important to understand how they arise, and they can emerge from several different sources. Researchers have categorized outliers into two major classes: those arising from data errors and those reflecting the natural variability of the data. Natural outliers occur because there are almost always atypical observations. For example, average income may hover around $50,000, yet a small percentage, perhaps 1%, may have incomes exceeding 10 times that amount, or $500,000.

On the other hand, errors can also be responsible for the presence of outliers. A competent analyst will consider the variety of sources that may be contributing to them, because the action needed to remedy the problem depends on its cause.

Human error in recording and entering data is often the culprit: ‘1.0’ can easily be entered as ‘100.0’. Proper data auditing should be done to eliminate these inaccuracies. If such an outlier cannot be corrected, it is better to remove the data point, as it does not represent the population.
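One simple form of data auditing is a plausibility check. You flag any value outside a range that domain knowledge says is possible, then trace each flagged record back to its source before deciding whether to correct or remove it. The sketch below is a minimal illustration under that assumption. The helper name `audit_range`, the sample entries, and the 0-to-20-cups bounds are all hypothetical choices, not a standard.

```python
def audit_range(values, low, high):
    """Return (index, value) pairs that fall outside [low, high].

    Hypothetical helper: the bounds come from domain knowledge rather
    than from the data, so a mistyped entry such as 100.0 cannot
    inflate its own threshold.
    """
    return [(i, v) for i, v in enumerate(values) if not low <= v <= high]


# Hypothetical daily coffee entries; 100.0 may be a mistyped 1.0.
entries = [1.0, 2.0, 100.0, 3.0, 2.0, -1.0]
for index, value in audit_range(entries, low=0, high=20):
    print(f"record {index}: {value} is outside the plausible range")
```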

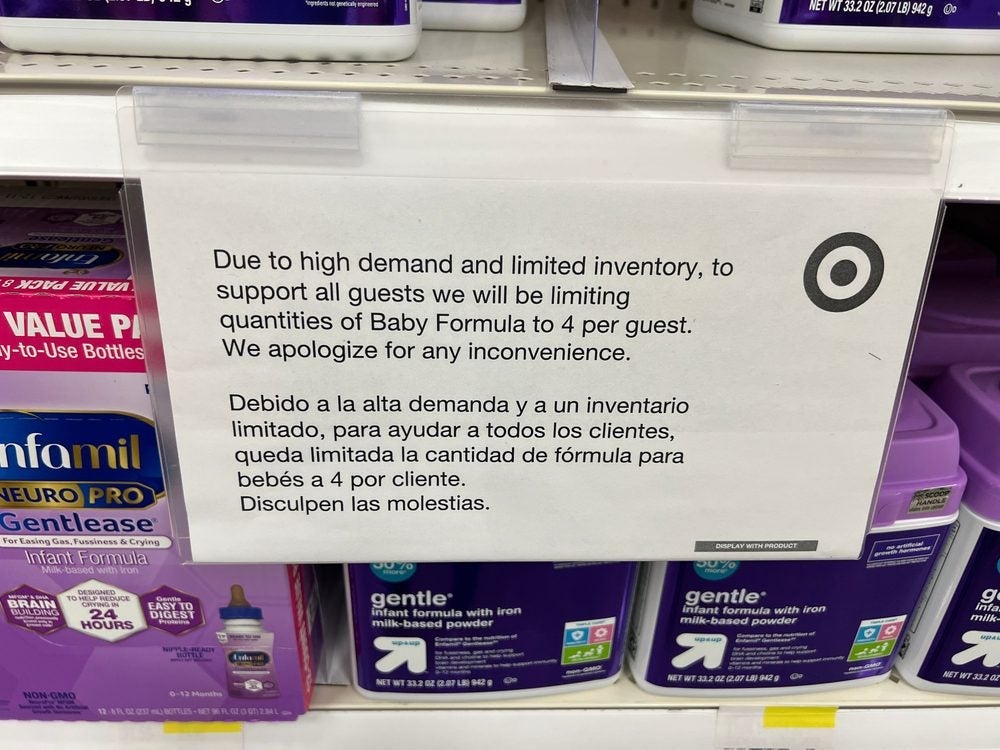

Have you ever responded to a survey and felt that it might be better to report what the researcher wants to hear? Or that, perhaps due to social pressure, a bogus response was in order? Or perhaps you overstated your income on a questionnaire because you felt uncomfortable reporting your actual earnings. This sort of thinking and behavior, common among human respondents, can result in outliers. In our college tuition example, if some public colleges were accidentally included in the survey, that too could lead to outliers. Inappropriate sampling is a common cause of anomalous observations.

Researchers also cite unusual circumstances as a cause of outliers. For example, a survey of respondents from the Newtown, CT, school community last December, on any topic, may have evoked responses that did not reflect their true feelings. Outliers may result.

Having said all of the above, it is quite possible for an outlier to be an authentic data point. With increasingly large samples (‘big data’, as they are referred to), the chance of observing an outlier rises as well. So how does one detect outliers? Are there any formal guidelines? There are a few general rules.
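The point about big data can be made concrete. Assume, purely for illustration, that the values are independent draws from a normal distribution. Then the chance that any one value lies more than three standard deviations from the mean is about 0.27%. The chance that a sample of n values contains at least one such value is therefore 1 - (1 - p)^n, which climbs quickly as n grows. The function name below is hypothetical.

```python
from statistics import NormalDist

# Probability that a single normal draw lies beyond +/-3 standard deviations.
p = 2 * (1 - NormalDist().cdf(3))


def chance_of_extreme(n, p=p):
    """Probability that at least one of n independent draws lies beyond 3 SD."""
    return 1 - (1 - p) ** n


for n in (10, 100, 1_000, 10_000):
    print(f"n={n:>6}: {chance_of_extreme(n):.3f}")
```

Under these assumptions, a sample of 1,000 perfectly clean values is more than 90% likely to contain at least one point beyond three standard deviations.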

The ‘no-brainer’ method is to examine the data visually. Scanning down a data column or plotting the data points is often enough to identify an obvious outlier.

A basic notion in statistics is that essentially all data values lie within three standard deviations of the mean; for roughly normally distributed data, about 99.7% do. Any value beyond this threshold can be considered an outlier. An obvious shortcoming of this method is that the mean and standard deviation used to set the threshold include the very outliers you are trying to identify! Nevertheless, it is widely employed.
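The three-standard-deviation rule takes only a few lines of code. The sketch below applies it to the hypothetical coffee data from earlier and to the investment figures used in the IQR example that follows. The function name is illustrative. The output shows the shortcoming just described: in a sample of only eight values, the 150000 investment inflates the standard deviation so much that it stays inside the threshold.

```python
from statistics import mean, stdev


def three_sd_outliers(values, k=3.0):
    """Return values lying more than k sample standard deviations from the mean.

    The mean and standard deviation are computed with any suspected
    outliers still included, which is the rule's main weakness.
    """
    m, s = mean(values), stdev(values)
    return [v for v in values if abs(v - m) > k * s]


coffee = [0] + [1] * 12 + [2] * 13 + [3] * 2 + [4] * 2 + [100]
investments = [20000, 20000, 30000, 30000, 40000, 50000, 50000, 150000]

print(three_sd_outliers(coffee))       # [100]
print(three_sd_outliers(investments))  # []
```

In fact, when the sample standard deviation is used, no value in a sample of n can lie more than (n - 1)/√n standard deviations from the mean. For n = 8 that limit is about 2.47, so the rule cannot flag anything in a sample that small.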

Other statisticians use the interquartile range (IQR). This is most easily understood with an annotated example based on a sample of respondents’ investments.

| STEP | ACTION | RESULT |
|---|---|---|
| 1 | Secure and sort the data (INVESTMENTS) | 20000, 20000, 30000, 30000, 40000, 50000, 50000, 150000 |
| 2 | Find the median. Half the data points are larger and half are smaller. The middle points are 30000 and 40000, so the median is (30000 + 40000) / 2 | 35000 |
| 3 | Find the upper quartile, Q3; this is the data point at which 25% of the data are larger | 50000 |
| 4 | Find the lower quartile, Q1; this is the data point at which 25% of the data are lower | 30000 |
| 5 | Subtract the lower quartile from the upper quartile. This is called the interquartile range, IQR | 20000 |
| 6 | Multiply the interquartile range by 1.5 | 30000 |
| 6a | Add this to the upper quartile: 50000 + 30000 | 80000 |
| 6b | Subtract this from the lower quartile: 30000 - 30000 | 0 |
| 7 | Any value greater than 6a or less than 6b is at least a MILD outlier | 150000 is at least a mild outlier |
| 8 | Multiply the interquartile range by 3 | 60000 |
| 8a | Add this to the upper quartile: 50000 + 60000 | 110000 |
| 8b | Subtract this from the lower quartile: 30000 - 60000 | -30000 |
| 9 | Any value greater than 8a or less than 8b is an EXTREME outlier | 150000 is an EXTREME outlier |

Most analytic software includes routines that perform these steps very quickly, and the IQR method is a frequently used procedure.
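Here is a rough sketch of what such a routine does, following the table’s steps. Quartile conventions differ between textbooks and software packages. This sketch assumes one common convention: Q1 and Q3 are the medians of the lower and upper halves of the sorted data. On the investment figures, that gives Q1 = 25000 rather than the 30000 in the table. The function also accepts the quartiles directly, so you can check the table’s own values. Either way, 150000 comes out as an extreme outlier. The function names are illustrative, not those of any particular package.

```python
from statistics import median


def quartiles(values):
    """Q1 and Q3 as the medians of the lower and upper halves of the sorted data.

    One of several conventions in use; for an odd number of values the
    overall median is left out of both halves. Needs at least two values.
    """
    data = sorted(values)
    half = len(data) // 2
    lower, upper = data[:half], data[half + len(data) % 2:]
    return median(lower), median(upper)


def iqr_outliers(values, q1=None, q3=None):
    """Label values beyond the 1.5*IQR fences 'mild' and beyond 3*IQR 'extreme'."""
    if q1 is None or q3 is None:
        q1, q3 = quartiles(values)
    iqr = q3 - q1                                  # step 5
    inner = (q1 - 1.5 * iqr, q3 + 1.5 * iqr)       # steps 6a and 6b
    outer = (q1 - 3 * iqr, q3 + 3 * iqr)           # steps 8a and 8b
    flagged = []
    for v in values:
        if v < outer[0] or v > outer[1]:
            flagged.append((v, "extreme"))         # step 9
        elif v < inner[0] or v > inner[1]:
            flagged.append((v, "mild"))            # step 7
    return flagged


investments = [20000, 20000, 30000, 30000, 40000, 50000, 50000, 150000]
print(quartiles(investments))                         # (25000.0, 50000.0)
print(iqr_outliers(investments))                      # median-of-halves quartiles
print(iqr_outliers(investments, q1=30000, q3=50000))  # quartiles from the table
```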

So now that you know outliers exist in your data, how do you deal with them?

One school of thought maintains that in many cases the rule is to leave well enough alone; that is, leaving the outlier in will not damage the analysis. Tree-based techniques, for example, group observations together into nodes, so an outlier simply falls into one of these nodes.

Others apply a transformation to the data to bring the outlier closer to the other values. Typically a log transformation is employed, but other recodes can do a good job as well.
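You can see the effect of a log transformation by rerunning the IQR rule on the logged values. The sketch below is self-contained and assumes the median-of-halves quartile convention; the helper name `tukey_label` is hypothetical. On the investment figures, 150000 drops from an extreme outlier on the original scale to a mild one on the log scale. It is still flagged, so the transformation moderates the outlier rather than erasing it.

```python
import math
from statistics import median


def tukey_label(values, x):
    """Classify x against IQR fences built from values (median-of-halves quartiles)."""
    data = sorted(values)
    half = len(data) // 2
    q1 = median(data[:half])
    q3 = median(data[half + len(data) % 2:])
    iqr = q3 - q1
    if x < q1 - 3 * iqr or x > q3 + 3 * iqr:
        return "extreme"
    if x < q1 - 1.5 * iqr or x > q3 + 1.5 * iqr:
        return "mild"
    return "not an outlier"


investments = [20000, 20000, 30000, 30000, 40000, 50000, 50000, 150000]
logged = [math.log10(v) for v in investments]

print(tukey_label(investments, 150000))         # extreme (original scale)
print(tukey_label(logged, math.log10(150000)))  # mild (log10 scale)
```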

Similarly, the analyst can categorize or bin values so that the outlier falls into its own bin. This often works quite well, and binning algorithms can help optimize the choice of categories.
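A minimal binning sketch follows. The cut points and labels are hypothetical, hand-picked for the investment figures in the IQR example; a binning algorithm would choose them from the data instead. Because the top bin is open-ended, the 150000 investment lands in a category of its own. Its exact size then no longer matters to any analysis that uses the binned variable.

```python
from bisect import bisect_right
from collections import Counter

# Hypothetical, hand-picked cut points; the last bin is open-ended.
EDGES = [25000, 50000, 100000]
LABELS = ["under 25k", "25k to under 50k", "50k to under 100k", "100k and over"]


def bin_value(v):
    """Map a value to the label of the bin it falls into."""
    return LABELS[bisect_right(EDGES, v)]


investments = [20000, 20000, 30000, 30000, 40000, 50000, 50000, 150000]
binned = [bin_value(v) for v in investments]
print(Counter(binned))
```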

An approach favored by many investigators is referred to as ‘trimming the mean’. Here the top and bottom X% of a variable’s values are removed from further analysis. Typically the data are sorted and the top 2% or 3% of values are eliminated. Alternatively, these top values can be replaced by the next highest value, a technique often called winsorizing. So, for example, consider the following data:

35, 39, 52, 61, 69, 79, 91, 104, 296, 401. Here we could eliminate 296 and 401 from further consideration, or replace these two highest values with 104. The trimmed mean is a convenient approach because it is less sensitive to outliers than the original mean and provides a reasonable estimate for many statistical analyses.
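A short sketch can check the arithmetic for this example. It computes three figures: the ordinary mean, the mean after dropping the two highest values, and the mean after replacing them with the next highest value, 104. The text trims only the top of the distribution. A conventional trimmed mean removes the same number of values from each end, so that version is included for comparison. The helper names are illustrative.

```python
from statistics import mean, median


def trim_top(values, k):
    """Drop the k largest values."""
    data = sorted(values)
    return data[:len(data) - k]


def winsorize_top(values, k):
    """Replace the k largest values with the next largest value."""
    data = sorted(values)
    cap = data[len(data) - k - 1]
    return [min(v, cap) for v in data]


def trim_both(values, k):
    """Drop the k smallest and k largest values (symmetric trimming)."""
    data = sorted(values)
    return data[k:len(data) - k]


x = [35, 39, 52, 61, 69, 79, 91, 104, 296, 401]
print("mean:", mean(x))                                  # 122.7
print("top-trimmed mean:", mean(trim_top(x, 2)))         # 66.25
print("top-winsorized mean:", mean(winsorize_top(x, 2))) # 73.8
print("symmetric trimmed mean:", mean(trim_both(x, 2)))  # 76
print("median:", median(x))                              # 74.0
```

All three adjusted figures land far closer to the median (74) than the raw mean of 122.7 does.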

Deleting the observation is another method some prefer. This may be workable with huge data sets, but a market researcher dealing with only a small number of respondents may find this approach less palatable. If the researcher has identified the outlier as a bogus observation, however, deletion seems more appropriate.

It’s not always straightforward to deal with the challenges that outliers present. What should never be overlooked is the potential goldmine that outlier analysis can offer the manager. I recall a rather comprehensive churn analysis I was involved in, which included market research as well as database analysis. Six outstanding customers had attrited, and it wasn’t clear why. Indeed, the attrition model had scored them quite low, a sign that they were expected to remain loyal. Upon further investigation, it was determined that all six of these previously high-value customers had spoken to the same customer service associate via the toll-free number. It appeared that this employee was not doing a very effective job.

To complete an effective study, the analyst should examine outliers carefully. They often contain critical information about the process, or about data gathering and capture practices. Before contemplating the removal of outliers from the data, a manager should try to understand why they appeared.

Sam Koslowsky is vice president of modeling solutions at Harte-Hanks Inc.
