More and more marketers are adding artificial intelligence (AI) to their toolbox of late, and with good reason. AI promises significant automation of workflows and intellectual processes, and to a great extent, it delivers on that promise. However, it’s crucial that marketers not lose sight of the fact that AI is not magical or omnipotent.

The algorithms behind AI are beholden to the integrity, or lack thereof, of the measurements and datasets used to train them. If a model is trained to predict future states of the market, the data it is built with must be representative of that market. Unfortunately, this is often not the case. As a result, algorithms risk becoming as biased as their constituent datasets. Left unaddressed, such errors and inconsistencies can cascade through an entire marketing strategy and severely hobble its performance. Among the numerous potential pitfalls to monitor, AI bias is often the hardest to detect and therefore the most dangerous. The best way to mitigate this bias is by maintaining the right level of human interaction throughout the marketing chain while applying healthy scrutiny to data sources.

The Danger of Relying on Internal Data

Bias problems multiply when marketers rely primarily on their own data to train their algorithms. This is increasingly common across many industries (due at least in part to growing privacy restrictions) and is particularly evident in the movie industry.

Organizations that assume their own data is somehow representative of the market as a whole are making a big mistake. Their AI algorithms are heavily biased toward what they have done in the past. Ironically, young companies are at the greatest disadvantage, as they have the smallest data pools, despite the best of data-driven intentions.

The hard truth is, algorithms are unable to imagine a future that is different from the past. Nowhere is that more evident than in box-office prediction models. While there is technically no limit to their variety, three approaches are commonly taken when developing such forecasting algorithms. For each, accuracy depends on the quality of the data made available to it.

Historical Box Office Models

Marketers don’t have access to paid engagement data or tracking data (surveys measuring awareness and intent) until eight weeks before a film’s release. As a result, early-phase box office models rely largely on historical databases such as IMDb and Box Office Mojo. The algorithm’s understanding of the film’s prospective viewers is based entirely on how similar releases have fared in the past. It matches metadata points like cast, director, rating and synopsis to historical analogs, crunches the numbers, and projects how well the new release will do. Not surprisingly, sequels and franchise installments tend to get glowing projections.
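To make the analog-matching idea concrete, here is a minimal sketch in Python. It is not any studio's actual model: the film records, the three metadata fields, the similarity score, and the `project_opening` helper are all hypothetical, and the dollar figures are invented for illustration.

```python
from statistics import mean

# Hypothetical historical records; titles, fields, and grosses are invented.
HISTORY = [
    {"title": "Sequel A", "genre": "action", "rating": "PG-13", "franchise": True, "opening_musd": 90.0},
    {"title": "Sequel B", "genre": "action", "rating": "PG-13", "franchise": True, "opening_musd": 110.0},
    {"title": "Drama C", "genre": "drama", "rating": "R", "franchise": False, "opening_musd": 12.0},
    {"title": "Horror D", "genre": "horror", "rating": "R", "franchise": False, "opening_musd": 18.0},
]

# Assumed metadata fields used for matching.
FEATURES = ("genre", "rating", "franchise")


def similarity(a, b):
    """Fraction of metadata fields on which two films agree."""
    return sum(a[f] == b[f] for f in FEATURES) / len(FEATURES)


def project_opening(film, history=HISTORY, k=2):
    """Average the openings of the k most similar historical films."""
    ranked = sorted(history, key=lambda h: similarity(film, h), reverse=True)
    analogs = ranked[:k]
    projection = mean(h["opening_musd"] for h in analogs)
    return projection, [h["title"] for h in analogs]


new_sequel = {"genre": "action", "rating": "PG-13", "franchise": True}
new_original = {"genre": "horror", "rating": "R", "franchise": False}

print(project_opening(new_sequel))    # matched to past franchise hits
print(project_opening(new_original))  # capped by what similar films earned before
```

In this toy setup the sequel inherits the strong openings of its franchise analogs, while the original film can only be projected as high as its nearest historical matches. The projection says nothing about what a film without close precedent might actually do.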

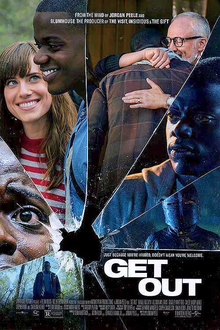

The issue is that, historically, Hollywood has been biased toward white, male films and not very representative of minorities. That built-in bias prevents early-phase algorithms from recognizing the tremendous value in unprecedented films such as “Get Out,” Jordan Peele’s directorial debut, which turned out to be the 10th-most-profitable film of 2017.

More broadly, if studios are relying on these algorithms to greenlight films—before even getting into the marketing and distribution—AI becomes a blocker to new and different kinds of content being produced. Producers can say their decisions to greenlight sequels and franchises are data-based, and they are, but the data is biased by historical box office performance.

Paid Media Engagement Models

As a film’s release date becomes visible on the horizon, marketers begin serving media on various paid digital platforms, typically beginning with a trailer drop and a few unique spots. User engagement with that media then begins to generate measurable data, which accumulates to indicate absolute brand health as well as relative interest levels among different audiences. A media engagement-driven algorithm learns how paid engagement patterns correlated with box office performance for past films, then looks for similar patterns in the upcoming release. Bias is an issue here because different audiences engage with platforms in different ways.

If your algorithm uses a metric biased toward one audience to predict the box office, it will be blind to signals from other audiences that engage in different ways, and the signals it does provide will be misleading. Similarly, ages, genders, or ethnicities that underuse (or overuse) social media won’t be properly taken into account, despite the fact that they may be hand-raising elsewhere. The model will often over-predict for movies that skew toward younger demographics and under-predict for ones that skew older.
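One way to surface this kind of skew is to back-test the model and compare its average signed error by audience segment. The sketch below assumes you already have predicted and actual openings labeled by audience skew; the rows, the segment labels, and the `mean_error_by_segment` helper are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical back-test rows: (audience skew, predicted opening, actual opening), in $M.
BACKTEST = [
    ("younger", 50.0, 40.0),
    ("younger", 30.0, 24.0),
    ("older", 20.0, 28.0),
    ("older", 10.0, 15.0),
]


def mean_error_by_segment(rows):
    """Mean signed error (predicted minus actual) for each audience segment."""
    errors = defaultdict(list)
    for segment, predicted, actual in rows:
        errors[segment].append(predicted - actual)
    return {segment: mean(errs) for segment, errs in errors.items()}


# Positive values mean over-prediction, negative values mean under-prediction.
print(mean_error_by_segment(BACKTEST))
```

If the errors were unbiased, each segment's mean would sit near zero. A consistently positive error for one segment and a negative one for another is a hint that the engagement metric reflects platform habits more than real intent.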

Survey Tracking Models

As a film’s release draws near, analysts can gain access to industry-standard survey panel reports that track audience awareness and intent. This tracking information offers data scientists a rich data set with which to train algorithms for predicting box office success.

Once again, bias can be a stumbling block. If the composition of the panel or the survey being used favors certain demographics over others, a model trained on it may reach faulty conclusions. The sample for surveys is not always representative of the general market audience, even in the best circumstances. For instance, the very fact that surveys are often delivered online means that the sample is skewed toward people with spare time to fill out surveys, or those in search of compensation for their time and opinions.
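A common partial remedy for an unrepresentative panel is to reweight respondents so each demographic group counts in proportion to its share of the target audience (post-stratification). The sketch below is illustrative only: the age groups, population shares, panel responses, and `weighted_intent` helper are all assumptions, not figures from a real tracking study.

```python
from collections import Counter

# Hypothetical target-audience shares by age group (they sum to 1.0).
POPULATION_SHARE = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}

# Hypothetical panel: (age group, 1 if the respondent intends to see the film, else 0).
# Younger respondents are over-represented: 6 of 10 versus a 30% target share.
PANEL = [
    ("18-34", 1), ("18-34", 1), ("18-34", 0), ("18-34", 1), ("18-34", 1), ("18-34", 0),
    ("35-54", 0), ("35-54", 1),
    ("55+", 0), ("55+", 0),
]


def raw_intent(panel):
    """Unweighted share of respondents who intend to see the film."""
    return sum(intent for _, intent in panel) / len(panel)


def weighted_intent(panel, population_share):
    """Intent share after weighting each group to its population share."""
    counts = Counter(group for group, _ in panel)
    n = len(panel)
    total = 0.0
    weight_sum = 0.0
    for group, intent in panel:
        # Weight = target share divided by the group's share of the panel.
        weight = population_share[group] / (counts[group] / n)
        total += weight * intent
        weight_sum += weight
    return total / weight_sum


print(raw_intent(PANEL))
print(weighted_intent(PANEL, POPULATION_SHARE))
```

Here the raw panel overstates intent because the group most likely to say yes is over-sampled. Weighting corrects for who is in the panel, but it cannot fix groups that are missing entirely or answers that are themselves unreliable.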

Similarly, algorithms must make judgments about the relative value of different measured attributes, such as aided awareness and interest, and those judgments may not hold equally true across all audiences. For example, if word-of-mouth promotion disproportionately drives turnout for certain audiences, their lifetime value (LTV) may not be taken into account by the model.

Human Involvement Is Always Needed

Marketers can reduce their vulnerability to AI bias somewhat by relying on as many different, complementary models as possible. Combining multiple inputs results in much richer insights. In addition, it’s important for companies to be conscious of where they are sourcing their data from, selecting against misrepresentative or inapplicable data sets.
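As a rough illustration of combining complementary models, the sketch below averages several forecasts and flags the result for human review when the models disagree widely. The model names, the dollar figures, the 25% threshold, and the `combine_forecasts` helper are hypothetical choices, and the sketch assumes all forecasts are positive.

```python
from statistics import mean


def combine_forecasts(forecasts, disagreement_threshold=0.25):
    """Average forecasts and flag them for review when they disagree.

    forecasts maps a model name to its predicted opening (positive, in $M).
    The spread is the gap between the highest and lowest forecast,
    expressed as a fraction of the combined forecast.
    """
    values = list(forecasts.values())
    combined = mean(values)
    spread = (max(values) - min(values)) / combined
    return {
        "combined": combined,
        "spread": spread,
        "needs_review": spread > disagreement_threshold,
    }


# Hypothetical forecasts from the three model types discussed above.
result = combine_forecasts(
    {"historical": 20.0, "paid_engagement": 30.0, "survey_tracking": 40.0}
)
print(result)
```

A simple average is only one option. The point of the flag is that wide disagreement between models is itself a signal, and one that is better handed to an analyst than averaged away.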

The single most important thing marketing organizations can do to mitigate the negative impact of AI bias is to maintain human involvement at all times. That may sound self-evident, but it’s lacking in a surprisingly large number of cases. The output of the model is not the be-all and end-all, and it should not serve as the foundation on which deterministic decision-making processes are built. AI is first and foremost artificial, and its value begins and ends with the input of both human creativity and critique.

Alex Nunnelly is senior director of analytics at Panoramic.
